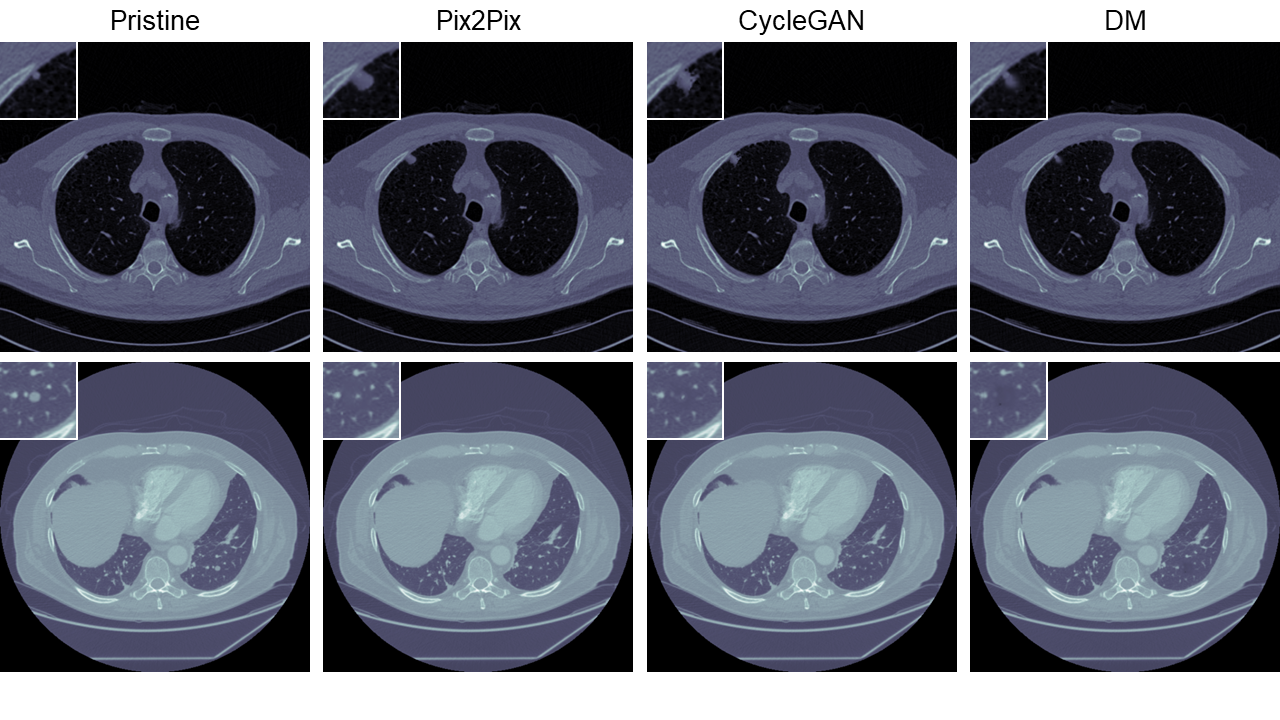

The ability to detect manipulated visual content is becoming increasingly important in many application fields, given the rapid advances in image synthesis methods. Of particular concern is the possibility of modifying the content of medical images, altering the resulting diagnoses. Despite its relevance, this issue has received limited attention from the research community. One reason is the lack of large and curated datasets to use for development and benchmarking purposes. Here, we investigate this issue and propose M3Dsynth, a large dataset of manipulated Computed Tomography (CT) lung images. We create manipulated images by injecting or removing lung cancer nodules in real CT scans, using three different methods based on Generative Adversarial Networks (GAN) or Diffusion Models (DM), for a total of 8,577 manipulated samples. Experiments show that these images easily fool automated diagnostic tools. We also tested several state-of-the-art forensic detectors and demonstrated that, once trained on the proposed dataset, they are able to accurately detect and localize manipulated synthetic content, including when training and test sets are not aligned, showing good generalization ability.

BibTeX

@article{zingarini2023m3dsynth,
  author={Giada Zingarini and Davide Cozzolino and Riccardo Corvi and Giovanni Poggi and Luisa Verdoliva},
  title={M3Dsynth: A dataset of medical 3D images with AI-generated local manipulations},
  journal={arXiv preprint arXiv:2309.07973},
  year={2023}
}

Acknowledgments

We gratefully acknowledge the support of this research by the Defense Advanced Research Projects Agency (DARPA) under agreement number FA8750-20-2-1004. The U.S. Government is authorized to reproduce and distribute reprints for Governmental purposes notwithstanding any copyright notation thereon. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of DARPA or the U.S. Government.

This work has also received funding from the European Union under the Horizon Europe vera.ai project, Grant Agreement number 101070093, and is supported by a TUM-IAS Hans Fischer Senior Fellowship and by PREMIER, funded by the Italian Ministry of Education, University, and Research within the PRIN 2017 program.